Python Memory Management

What happens under the hood, let's go …


Let me start by saying that Python is a dynamically typed language and everything in Python is an object. Variables do not hold the objects themselves; what we get are references to those objects.

With an example:

x = 10

print("x type is : ", type(x))

----

x type is : <class 'int'>

Here, x is a reference variable to an integer object 10.

Another reference variable y is being created, which again points to integer object 10.

y = x

Check their memory addresses using the function id().

print("Integer object 10 referenced by variable x", hex(id(x)))

print("Integer object 10 referenced by variable y", hex(id(y)))

----

Integer object 10 referenced by variable x 0x10c417a50

Integer object 10 referenced by variable y 0x10c417a50

It suggests that both x and y reference variables are referring to the same integer object 10.

Now, what if I change the value through the variable x? Would the change also show up in the variable y?

x = x + 1

print("Integer object 11 referenced by variable x", hex(id(x)))

----

Integer object 11 referenced by variable x 0x10c417a70

Now, this has nothing to do with the reference variable y. Please do not presume that, because y also refers to 10, x had to be given a new object so that the change would not affect y. Integers are immutable: x + 1 yields a different integer object (11), and x is simply rebound to it.

The fact is that even if y did not exist, executing x = x + 1 would still leave x referring to a different integer object. We can test this fact …
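One way to test this, as a minimal sketch: bind a fresh name that has no aliases, rebind it with `+ 1`, and compare `id()` before and after. The name `n` is made up for this illustration and is not part of the example above.

```python
# Hypothetical name `n`: no other variable of ours refers to its value.
n = 10
id_before = id(n)

n = n + 1            # evaluates to the int 11 and rebinds n to it
id_after = id(n)

# The integer 10 itself was never modified; n simply refers elsewhere now.
print("id changed:", id_before != id_after)
print("n is now  :", n)
```

The address changes even though nothing else was holding on to the old value, so the new object is a consequence of integers being immutable, not of protecting other references.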

To further qualify my statement, let us create another reference variable z and assign it the value 10.

z = 10

print("Integer object 10 referenced by variable z", hex(id(z)))

print("z type is : ", type(z))

----

Integer object 10 referenced by variable z 0x10c417a50

z type is : <class 'int'>

z has the same memory address as y, which means they are pointing to the same integer object 10. This is because Python optimises memory utilization by reusing an existing object for the new reference variable instead of allocating another one. In CPython this reuse is guaranteed only for small integers (-5 through 256), which are cached; larger integers with equal values may or may not share an object.
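The sharing seen here can be probed with the `is` operator. The sketch below assumes CPython, which keeps a cache of small integers (-5 through 256); other implementations may behave differently. The variable names are illustrative, and `int("...")` is used only so that the values are built at run time rather than merged by the compiler.

```python
# int("...") forces each value to be built at run time, so the compiler
# cannot fold the two results into one shared constant.
small_a = int("10")
small_b = int("10")
large_a = int("1000")
large_b = int("1000")

small_shared = small_a is small_b   # same cached object in CPython
large_shared = large_a is large_b   # two separate objects in CPython

print("10 shared  :", small_shared)
print("1000 shared:", large_shared)
print("equal value:", large_a == large_b)
```

This is why `==` should be used to compare values, while `is` only answers whether two names refer to the very same object.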

Now, time to qualify my other statement about Python being dynamically typed.

Let us say that, in the middle of a program, a developer makes the reference variable z point to an object of some other type.

Is that a valid operation?

z = "python is fun"

print("z type is : ", type(z))

----

z type is : <class 'str'>

Wow! The reference variable z now refers to a string object instead of an integer object. The type belongs to the object, not to the variable, which means we need not specify a datatype for a reference variable either during creation or later in the program.
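As a small sketch of the same idea, the snippet below rebinds one name to objects of three different types and records what `type()` reports each time. The list `types_seen` is a helper invented for this illustration.

```python
z = 10
types_seen = [type(z).__name__]      # hypothetical helper list

z = "python is fun"                  # same name, now bound to a str object
types_seen.append(type(z).__name__)

z = [1, 2, 3]                        # and now to a list object
types_seen.append(type(z).__name__)

print(types_seen)
```

No declaration changes between the assignments; only the object that the name refers to does.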

You must be wondering how all this magic happens in the background?

Python doesn't compile our code to machine code before execution. Rather, Python is itself a compiled program (called the Python interpreter) that reads your Python code, parses it, converts it to bytecode and then runs that bytecode.
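The bytecode step can be observed from Python itself. The following is a minimal sketch using the standard `compile()` built-in and the `dis` module; the source string and the `<example>` filename are placeholders, and the exact opcodes printed differ between Python versions.

```python
import dis

source = "x = 10\nx = x + 1\n"

# compile() turns source text into a code object that holds bytecode.
code = compile(source, "<example>", "exec")
print(type(code.co_code), len(code.co_code), "bytes of bytecode")

# dis prints a human-readable listing of that bytecode.
dis.dis(code)

# The same code object can then be executed by the interpreter.
namespace = {}
exec(code, namespace)
print("x =", namespace["x"])
```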

On Linux:

root@fcaea7310d7f:~# file /usr/bin/python3.6

/usr/bin/python3.6: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 3.2.0, BuildID[sha1]=fc614aa299a924960da33b875fb9cfaa641ea5bc, stripped

On MacBook:

file /usr/local/bin/python3

/usr/local/bin/python3: Mach-O 64-bit executable x86_64

When the Python interpreter runs on an operating system, it must be running just like any other program, mustn't it? On Linux this means it is an ELF file with a system-defined program memory model, and the system itself provides no such dynamic behaviour or memory management.

So must Python be doing some memory management for us, so that we get dynamic typing and can completely ignore memory allocation and deallocation in our code?

The answer is yes, and the following section deals with this part:

If you understand the memory model even at a high level, you will know that at runtime a program can use two types of memory segments: the stack segment and the heap segment. The heap is the segment a program can request dynamic allocations from, while the stack is used for function and method execution. On a Linux system, dynamic allocation happens by calling the brk()/sbrk() system calls, which return pages; it is up to the application to maintain and account for the memory it receives. Anyway, for now let us proceed.

Let us try to understand it with some example code:

def bar(a):
    a = a - 1
    return a

def foo(a):
    a = a * a
    b = bar(a)
    return b

def main():
    x = 2
    y = foo(x)

if __name__ == "__main__":
    main()

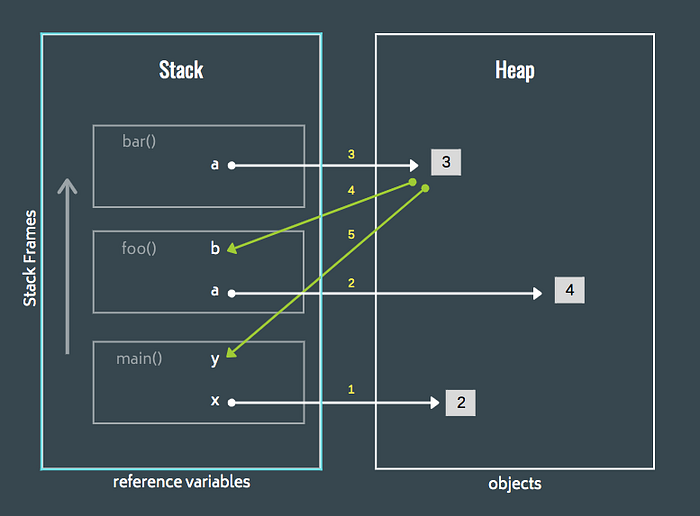

The program stack, or call stack, is created. Reference variables go onto the stack as local variables and arguments, but the objects themselves are allocated from the heap. Yes, every new object comes from the heap, and in Python everything is an object. I guess you got the hint as to why Python is slow: dynamic memory allocation is often one of the costliest system operations, and Python keeps doing it all the time. The Python interpreter has no clue when the developer will next ask for memory or for how much, so pooling memory in advance only helps up to a point.
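To make the heap allocations visible, here is a rough sketch using the standard `tracemalloc` module, which tracks memory blocks allocated by Python. The count of 10,000 objects is arbitrary, and the byte figures depend on the Python version and platform.

```python
import tracemalloc

tracemalloc.start()
before, _ = tracemalloc.get_traced_memory()   # (current, peak) in bytes

# Each object() call creates a new object, plus the list that holds them.
objects = [object() for _ in range(10_000)]

after, _ = tracemalloc.get_traced_memory()
tracemalloc.stop()

grown = after - before
print("traced bytes allocated:", grown)
```

The local name `objects` is just a reference; the memory that grew is the heap memory behind the objects it refers to.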

Anyway, let us first walk through the above code line by line and come to memory optimization techniques later. The sequence of stack events is shown below: as functions get called (pushed onto the stack) and return (popped from the stack), they allocate objects in the heap.

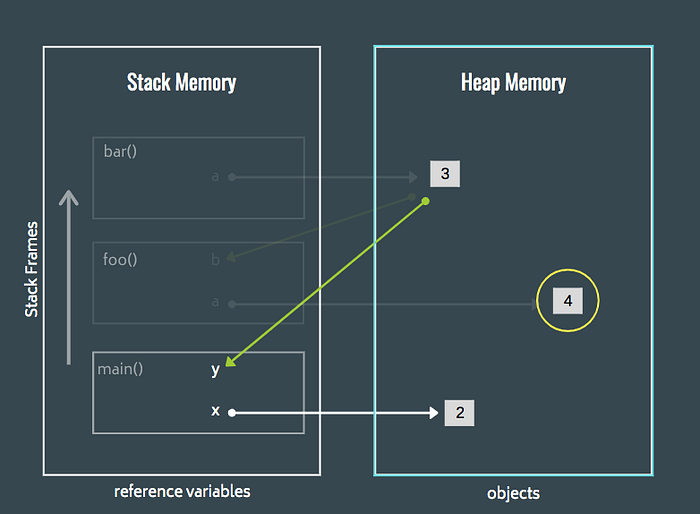
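The push and pop sequence can also be recorded at run time. The sketch below is an instrumented variant of the example, not the original code: `call_chain()` and `snapshots` are helpers invented here, and `main()` is called directly so the result can be inspected.

```python
import inspect

snapshots = []  # hypothetical helper: one recorded call stack per call

def call_chain():
    """Return the function names on the call stack, innermost first."""
    frame = inspect.currentframe().f_back   # skip call_chain itself
    names = []
    while frame is not None:
        names.append(frame.f_code.co_name)
        frame = frame.f_back
    return names

def bar(a):
    snapshots.append(call_chain())
    return a - 1

def foo(a):
    snapshots.append(call_chain())
    return bar(a * a)

def main():
    snapshots.append(call_chain())
    return foo(2)

result = main()
for chain in snapshots:
    # Keep only the frames of this example, outermost first.
    print(" -> ".join(reversed(chain[:chain.index("main") + 1])))
print("result:", result)
```

Each printed line is the stack at the moment a function starts: first `main`, then `main -> foo`, then `main -> foo -> bar`.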

In the figure, as foo() and bar() go out of scope (popped from the stack), they leave behind heap memory holding stale objects and do not bother to deallocate it. That's not quite graceful, is it?

Python has a built-in resource manager called the garbage collector. It keeps a count of the references to each object, so it knows which memory is still in use and which is no longer referenced before it reclaims it.

import sys
import weakref

class Color():
    def __init__(self, r, g, b):
        self.red = r
        self.green = g
        self.blue = b

clr = object()

print("The ref count to object clr : ", sys.getrefcount(clr))

----

The ref count to object clr : 2

The reference count is 2 because the getrefcount() function itself also holds a (temporary) reference to the object while it is being called. Create another reference variable for the same object.

clr1 = clr

print("The ref count to object clr : ", sys.getrefcount(clr))

----

The ref count to object clr : 3

Try deleting one reference:

del clr1

print("The ref count to object clr : ", sys.getrefcount(clr))

----

The ref count to object clr : 2

Now, you may wonder why I didn't take a simple example with int, float or str and created a class instead to show the reference count. We can do the same for int, float or str, as they are classes too in Python. Let us try:

var = 9

print("The ref count to object integer 9 : ", sys.getrefcount(var))

----

The ref count to object integer 9 : 18

What is that?

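Please run it on your machine; you may see a different value. This is because Python is already using the integer value 9 internally in many other places, and they all share the same object, so the count includes those references as well as ours. (On recent CPython versions, which treat small integers as immortal, you may instead see a very large number that does not change.) Let us check it once again: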

var2 = 9

print("The ref count to object integer 9 : ", sys.getrefcount(var))

----

The ref count to object integer 9 : 19

It is also clear how Python optimizes here: it doesn't create another heap allocation for storing the integer value 9. When the number of references to an object on the heap drops to 0, Python deallocates the object and reclaims its memory.
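The drop to zero can be watched with a weak reference, which observes an object without adding to its reference count. This is a minimal sketch assuming CPython, where an object is freed as soon as its last reference goes away; the names `probe` and `alias` and the colour values are illustrative.

```python
import weakref

class Color:
    def __init__(self, r, g, b):
        self.red = r
        self.green = g
        self.blue = b

clr = Color(255, 0, 0)
probe = weakref.ref(clr)     # does not keep the object alive
alias = clr                  # a second strong reference

del clr
alive_after_first_del = probe() is not None   # alias still holds it

del alias
alive_after_last_del = probe() is not None    # no strong references left

print("alive after first del:", alive_after_first_del)
print("alive after last del :", alive_after_last_del)
```

Calling `probe()` returns the object while it exists and `None` once it has been deallocated.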

In the next part, I will dive deep into garbage collector internals. In the meantime, it would be nice if you read up on how brk()/sbrk() work, followed by how malloc() and calloc() work in C; they use algorithms such as best-fit, first-fit, last-fit and buddy allocation to manage memory fragments.

Until the next post, stay safe and healthy …
